Until now, Facebook had never made public the guidelines its moderators use to decide whether to remove violence, spam, harassment, self-harm, terrorism, intellectual property theft, and hate speech from the social network. The company hoped to avoid making it easy to game these rules, but that worry has been overridden by the public’s constant calls for clarity and protests about its decisions. Today Facebook published 25 pages of detailed criteria and examples for what is and isn’t allowed.

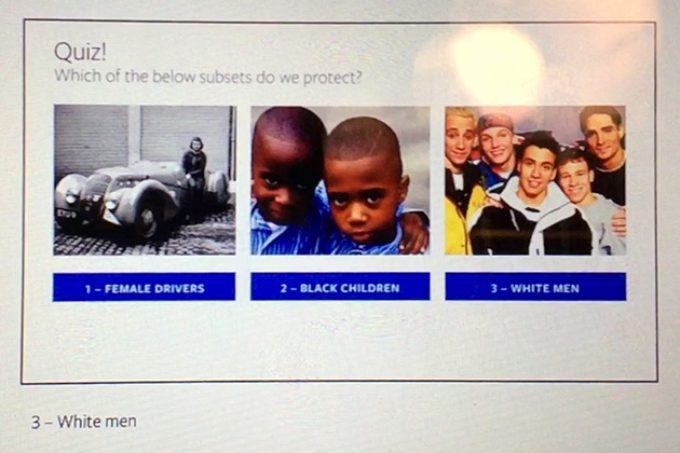

Facebook is effectively shifting criticism away from individual enforcement mistakes and toward the underlying policy. One such mistake came when it took down posts of the newsworthy historical “Napalm Girl” photo because it contains child nudity, before eventually restoring them. Some groups will surely find points to take issue with, but Facebook has made some significant improvements. Most notably, it no longer denies minorities protection from hate speech when an unprotected characteristic like “children” is appended to a protected characteristic like “black”.

Nothing is technically changing about Facebook’s policies. But previously, only leaks, like a copy of an internal rulebook obtained by the Guardian, had given the outside world a look at when Facebook actually enforces those policies. These rules will be translated into over 40 languages for the public. Facebook currently has 7,500 content reviewers, up 40% from a year ago.

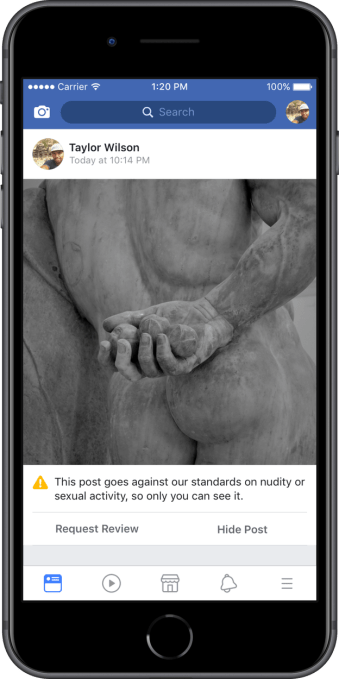

Facebook also plans to expand its content removal appeals process. It already lets users request a review of a decision to remove their profile, Page, or Group. Now Facebook will notify users when their content containing nudity, sexual activity, hate speech, or graphic violence is removed and let them hit a button to “Request Review”; the review will usually happen within 24 hours. Finally, Facebook will hold “Facebook Forums: Community Standards” events in Germany, France, the UK, India, Singapore, and the US to give its biggest communities a closer look at how the social network’s policy works.

Fixing the “white people are protected, black children aren’t” policy

Monika Bickert, Facebook’s VP of Global Policy Management, who has been coordinating the release of the guidelines since September, told reporters at Facebook’s Menlo Park HQ last week that “There’s been a lot of research about how when institutions put their policies out there, people change their behavior, and that’s a good thing.” She admits there’s still the concern that terrorists or hate groups will get better at developing “workarounds” to evade Facebook’s moderators, “but the benefits of being more open about what’s happening behind the scenes outweighs that.”

Content moderator jobs at various social media companies, including Facebook, have been described as hellish in many exposés about what it’s like to fight the spread of child porn, beheading videos, and racism for hours a day. Bickert says Facebook’s moderators are trained to deal with this and have access to counseling and 24/7 resources, including some on-site. They can ask not to look at certain kinds of content they’re sensitive to. But Bickert didn’t say that Facebook limits how many hours per day moderators spend viewing offensive content, like the four-hour limit YouTube recently implemented.

A controversial slide depicting Facebook’s now-defunct policy that disqualified subsets of protected groups from hate speech shielding. Image via ProPublica

The most useful clarification in the newly revealed guidelines explains how Facebook has ditched its poorly received policy that deemed “white people” protected from hate speech, but not “black children”. That rule, which left subsets of protected groups exposed to hate speech, was blasted in a ProPublica piece in June 2017, though Facebook said it no longer applied that policy.

Now Bickert says “Black children — that would be protected. White men — that would also be protected. We consider it an attack if it’s against a person, but you can criticize an organization, a religion . . . If someone says ‘this country is evil’, that’s something that we allow. Saying ‘members of this religion are evil’ is not.” She explains that Facebook is becoming more aware of the context around who is being victimized. However, Bickert notes that if someone says “‘I’m going to kill you if you don’t come to my party’, if it’s not a credible threat we don’t want to be removing it.”

Do community standards = editorial voice?

Being upfront about its policies might give Facebook more to point to when it’s criticized for failing to prevent abuse on its platform. Activist groups say Facebook has allowed fake news and hate speech to run rampant and lead to violence in many developing countries where Facebook hasn’t had enough native-speaking moderators. The Sri Lankan government temporarily blocked Facebook in hopes of stopping calls for violence, and those on the ground say Zuckerberg overstated Facebook’s progress in addressing the problems in Myanmar that led to hate crimes against the Rohingya people.

Revealing the guidelines could at least cut down on confusion about whether hateful content is allowed on Facebook. It isn’t. But the guidelines also raise the question of whether the value system they codify gives Facebook an editorial voice that would define it as a media company. That could mean the loss of legal immunity for what its users post. Bickert stuck to a rehearsed line that “We are not creating content and we’re not curating content”. Still, some could certainly argue that all of Facebook’s content filters amount to a curatorial layer.

But whether Facebook is a media company or a tech company, it’s a highly profitable one. It needs to spend more of the billions it earns each quarter on applying its policies evenly and forcefully around the world.